More Infrastructure Shenanigans

2023-12-24

I have been busy with my infrastructure since my last post. Notably: I changed how my offsite backups are hosted, upgraded my NAS's storage and downgraded the GPU, rack-mounted stuff, built a Proxmox box, and dropped krops for deploying my machines...

It's a lot, so it's probably worth breaking down. Let's start simple: I upgraded my NAS from four 4TB IronWolf Pro drives to three 16TB Seagate Exos drives. That roughly triples my storage even with the RAID-Z1 configuration, but it's not all upside. Unfortunately, these new drives are significantly louder, and they caused my poor little NAS to make much more noise and rattle. This is mostly down to the case we chose for rack mounting - rather cheap, but it gets the job done. We largely solved the issue by adding foam tape in strategic places, plus some tape on the front panel bits that were rattling. It felt jank at first but works really well.
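Here is a rough sense of the numbers. A RAID-Z1 vdev gives up one drive's worth of space to parity, so usable capacity is approximately (drives - 1) × drive size. The sketch below assumes the old four-drive pool was also a single RAID-Z1 vdev. It ignores ZFS metadata, padding, and the TB vs TiB difference, so real pools will report somewhat less.

```python
def raidz_usable_tb(drives: int, size_tb: float, parity: int = 1) -> float:
    """Approximate usable capacity of a single RAID-Z vdev.

    Ignores ZFS metadata, allocation padding, slop space and the TB vs TiB
    difference, so real pools report somewhat less.
    """
    if drives <= parity:
        raise ValueError("a RAID-Z vdev needs more drives than parity disks")
    return (drives - parity) * size_tb


old = raidz_usable_tb(4, 4.0)   # four 4TB IronWolf Pro drives (assumed RAID-Z1)
new = raidz_usable_tb(3, 16.0)  # three 16TB Exos drives in RAID-Z1
print(f"old: {old} TB, new: {new} TB, ratio: {new / old:.2f}x")
# old: 12.0 TB, new: 32.0 TB, ratio: 2.67x
```

Raw capacity exactly triples (16TB to 48TB). After one drive of parity per pool, the usable gain works out closer to 2.7x.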

As part of our homelab upgrades, we also started rack-mounting the machines. We picked up what seemed like a reasonable rack, only to quickly realise that racks designed for AV equipment are not the same as racks designed for compute. This was rather startling - after all, a rack is a rack, right? Apparently not, and after several failed attempts to find actual rails that fit, we ended up with "rails" that are more shelf-like than anything. They work, and they pair well with the foam we used to isolate the NAS, but sliding machines out is a little rougher. The rack itself is unfortunately in need of cable management, which I will eventually get to when I can bring myself to power everything down and ruin my sweet, sweet uptime.

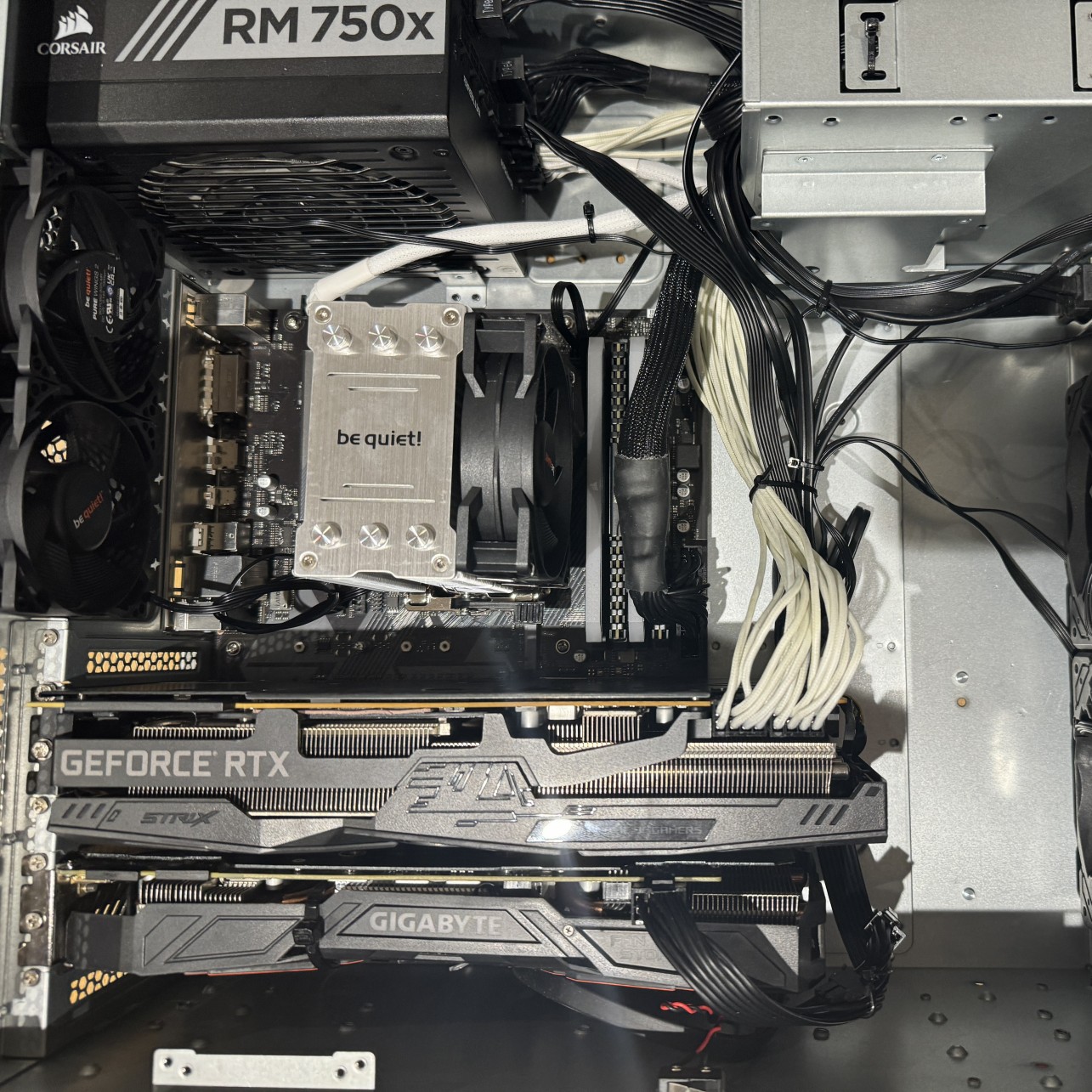

The other machine we put in the rack, besides my small Raspberry Pi Kubernetes cluster, is a new Proxmox virtualisation host. It has reasonable specs for running multiple virtual machines: an AMD Ryzen 7 5700X, 64GB of memory, an RTX 2080 SUPER and a GTX 1070, and a 1TB and a 500GB SSD. As I explained in my last post about Proxmox, the goal of this box is to serve as a streaming machine for various online events. That requires Windows and GPUs for the streaming software and encoding. With our newly upgraded internet connection it works really well, though only after we pulled out the extra 32GB of memory I had added to reach 96GB. With it installed, all the memory ran at 2133MHz rather than the 3600MHz the sticks were rated for. Right now it's running two Windows VMs for streaming (either vMix or OBS depending on the event) with one GPU assigned to each, a small machine for Immich's machine learning component, and a VM for various other things I need a machine for. I would like to give it more memory and more cores, but it has already handled a VR event with both Windows VMs working (one streaming video to the internet, the other running VRChat, ingesting some video feeds, and streaming to Twitch while recording locally).
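To put the 2133MHz vs 3600MHz difference in perspective, here's a back-of-the-envelope calculation of theoretical peak memory bandwidth. DDR speeds quoted in "MHz" are really megatransfers per second, and each transfer moves 64 bits (8 bytes) per channel. The sketch assumes dual-channel memory, which is what the AM4 platform the 5700X uses provides. Real-world throughput is lower, and latency, which this ignores, matters too.

```python
def peak_bandwidth_gbs(mt_per_s: int, channels: int = 2, bytes_per_transfer: int = 8) -> float:
    """Theoretical peak DRAM bandwidth in decimal GB/s.

    "3600MHz" DDR memory actually runs at 3600 MT/s; each transfer moves
    64 bits (8 bytes) per channel. Assumes dual channel by default.
    """
    return mt_per_s * bytes_per_transfer * channels / 1000


rated = peak_bandwidth_gbs(3600)
actual = peak_bandwidth_gbs(2133)
print(f"rated: {rated:.1f} GB/s, with 96GB installed: {actual:.1f} GB/s")
print(f"bandwidth lost: {1 - actual / rated:.0%}")
# rated: 57.6 GB/s, with 96GB installed: 34.1 GB/s
# bandwidth lost: 41%
```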

One downside of opting for Proxmox is that I can't rely on Nix to configure and manage the machine. For everything else, though, I've made moves to make my life much easier. I ported all my NixOS configurations to Nix flakes and switched to nixinate for deployment. It's a small wrapper around the remote deployment functionality Nix flakes already offer, and it has been a massive improvement over krops. For one, machines don't need their own checkout of the nixpkgs repository, which in turn means I don't need to install git manually before deploying from the repository. Nixinate also does some magic to keep working even when SSH disconnects. That is great when updating a system requires the network to restart (Tailscale, NetworkManager, whatever it may be).

You may have noticed the Ryzen 5000-series CPU in the Proxmox machine. Rather than buying new hardware for it, I reused the hardware that had been living in my primary workstation and upgraded the workstation instead. Very worthwhile - I grabbed a Ryzen 7 7700X, a Radeon RX 7900 XT, a Samsung 980 Pro 2TB, and 32GB of DDR5 memory at 6200MHz. There is a notable speed improvement, not just in games (where I can now crank everything) but also in day-to-day development. I don't do much software development these days, since I focus on ops. The software I do build is usually either a large project from someone else or written in Rust (often both). With a bit of tinkering, and the massive be quiet! CPU cooler, it can comfortably run at about 5.5GHz depending on the task.

Pivoting a bit, let's talk about connectivity. I've relied a lot on Tailscale to keep my devices connected to each other and to reach the services I self-host without exposing them to the internet. But as I bring more services into my home, I find some cases where I do want to expose something, especially for disaster recovery. For a while I relied on a DIY solution I detailed in an earlier post, but it had a few downsides. The biggest was that the tunnel connected to everything over Tailscale. That became a problem when the proxy's keys expired while it was proxying my Authentik instance, which is the very thing I use to authenticate with Tailscale. I finally caved and moved my tunnels from my DIY solution to Cloudflare Tunnels. Admittedly, I had already been using it for my partner's website, with a tunnel exposing the backend CouchDB instance and CloudFront handling the caching. Why not use Cloudflare directly? I'm not sure - this was likely set up around the time I was trying to get out of my comfort zone and play with the other offerings out there, so I kept one foot out the door. Regardless, the free tier is sometimes too good to ignore. There were some nice advantages to moving, though. I no longer rely on Tailscale functioning for some core services. I can also put OIDC authentication in front of some of the services, so I can still reach them from non-Tailscale devices without worrying about them being exposed to the internet.
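For anyone who hasn't used Cloudflare Tunnels: `cloudflared` decides where to send each request using an ordered list of ingress rules in its config file. Each rule can match on a hostname (optionally a `*.` wildcard) and a path regex, and the first matching rule wins. The last rule has to be a catch-all, such as `http_status:404`. The toy model below mimics that matching in Python purely to illustrate the idea. It is not cloudflared's code, and the hostnames, ports and rule set are made up.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass
class IngressRule:
    service: str
    hostname: Optional[str] = None  # exact host, or "*.example.com" wildcard
    path: Optional[str] = None      # regular expression searched in the path

    def matches(self, host: str, path: str) -> bool:
        if self.hostname is not None:
            if self.hostname.startswith("*."):
                # Wildcard: any subdomain of the suffix, but not the bare domain.
                if not host.endswith(self.hostname[1:]):
                    return False
            elif host != self.hostname:
                return False
        if self.path is not None and re.search(self.path, path) is None:
            return False
        return True


def route(rules: list[IngressRule], host: str, path: str) -> str:
    """Return the service of the first rule that matches, top to bottom."""
    for rule in rules:
        if rule.matches(host, path):
            return rule.service
    raise LookupError("no rule matched; the last rule should be a catch-all")


# Hypothetical rule set: one exact host, one wildcard with a path, then a catch-all.
rules = [
    IngressRule(hostname="auth.example.com", service="http://localhost:9000"),
    IngressRule(hostname="*.example.com", path=r"^/api/", service="http://localhost:8080"),
    IngressRule(service="http_status:404"),
]

print(route(rules, "auth.example.com", "/"))          # http://localhost:9000
print(route(rules, "photos.example.com", "/api/v1"))  # http://localhost:8080
print(route(rules, "photos.example.com", "/"))        # http_status:404
```

Because the order matters, more specific rules belong above broader wildcards. The catch-all keeps unknown hostnames from reaching anything.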

As part of this move (back?) to Cloudflare Tunnels, I also reassessed my usage of the platform as a whole. Cloudflare has grown a lot since I started using it, and offers far more than just DNS and proxying. While I was considering all of this, I was hired as a Site Reliability Engineer at Cloudflare, which gave me a very good excuse to dive in and start fully leveraging the platform. So far I've migrated gmem.ca and its associated endpoints and files, along with fursona.gmem.ca. artbybecki.com was moved to Cloudflare Pages a little while ago. I've since removed some of the hops and services it relied on, and the galleries are now served using Cloudflare's own image resizing and optimisation offerings (not Cloudflare Images), which has made the site much, much faster. This website is still served by Fly.io directly. I haven't finished the gossip-protocol-based content distribution, and at this rate I'm unlikely to. I still like having the site as a place to play with Rust and interesting ideas, though, so it will probably never be proxied through Cloudflare. That said, the images on this blog will likely end up in an R2 bucket or similar so I don't have to think about them.
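Cloudflare's image resizing can be driven entirely through the URL. On a zone with the feature enabled, a request to `/cdn-cgi/image/<options>/<source>` returns a transformed copy of `<source>`. Here `<options>` is a comma-separated list such as `width=800,quality=75,format=auto`. The helper below just builds those URLs. It's a sketch that assumes the URL-based approach; the galleries could just as well be using Workers. The zone and image path in the example are hypothetical.

```python
def resize_url(zone: str, source: str, **options: object) -> str:
    """Build a Cloudflare image-resizing URL of the form
    https://<zone>/cdn-cgi/image/<options>/<source>.

    `source` may be a path on the same zone or an absolute URL.
    Options keep the order they were passed in.
    """
    if not options:
        raise ValueError("at least one resizing option is required")
    opts = ",".join(f"{key}={value}" for key, value in options.items())
    return f"https://{zone}/cdn-cgi/image/{opts}/{source.lstrip('/')}"


# Hypothetical gallery image, scaled down for a thumbnail grid.
print(resize_url("example.com", "/gallery/example.jpg",
                 width=800, quality=75, format="auto"))
# https://example.com/cdn-cgi/image/width=800,quality=75,format=auto/gallery/example.jpg
```

With `format=auto`, Cloudflare can serve a more efficient format such as WebP or AVIF to browsers that support it, without the gallery needing separate files.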

As a quick disclaimer: since I was hired at Cloudflare, it's likely that I will mention their product(s) more often, here and elsewhere. When I join a new company, I tend to explore the bits and pieces of its tech stack in my own time, and Cloudflare does a lot of dogfooding. So inevitably I will be talking about Cloudflare more often.

So where do I go from here?

The number one priority now is building a custom router. I've played with OPNsense enough at this point to feel comfortable with it, so I hope to eventually replace our Asus RT-AX82U with some custom hardware and one or two wireless access points. The main advantages will be adding 2.5Gbit capability to my network (which will also require a new switch - oh no! How dreadful!), and VLANs to hopefully separate the virtual machines (and other devices). The NAS will also see some minor upgrades over time, but nothing drastic is likely. I'll also be evaluating my use of Tailscale, although replacing it is out of the question since I depend on it so much. Tailscale (the company) being VC-backed is always in the back of my mind, but I haven't found anything that does exactly what it does for the same price and convenience. But who knows! Tech moves fast and I'm always eager to experiment.
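As a rough illustration of what 2.5Gbit buys, here's the best-case time to move a hypothetical 100GB of data at line rate. It ignores Ethernet and TCP overhead and assumes the disks on both ends can keep up, which they may not.

```python
def transfer_seconds(size_gb: float, link_gbit: float) -> float:
    """Best-case transfer time for size_gb decimal gigabytes over a link of
    link_gbit Gbit/s, ignoring protocol overhead and disk limits."""
    return size_gb * 8 / link_gbit


for link in (1.0, 2.5):
    secs = transfer_seconds(100, link)
    print(f"{link} Gbit/s: {secs:.0f} s (~{secs / 60:.1f} min)")
# 1.0 Gbit/s: 800 s (~13.3 min)
# 2.5 Gbit/s: 320 s (~5.3 min)
```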